Device Allows Users to Manipulate 3-D Virtual Objects More Quickly

For Immediate Release

Researchers at North Carolina State University have developed a user-friendly, inexpensive controller for manipulating virtual objects in three dimensions in computer programs. The device lets users manipulate objects more quickly, and with less lag time, than existing technologies.

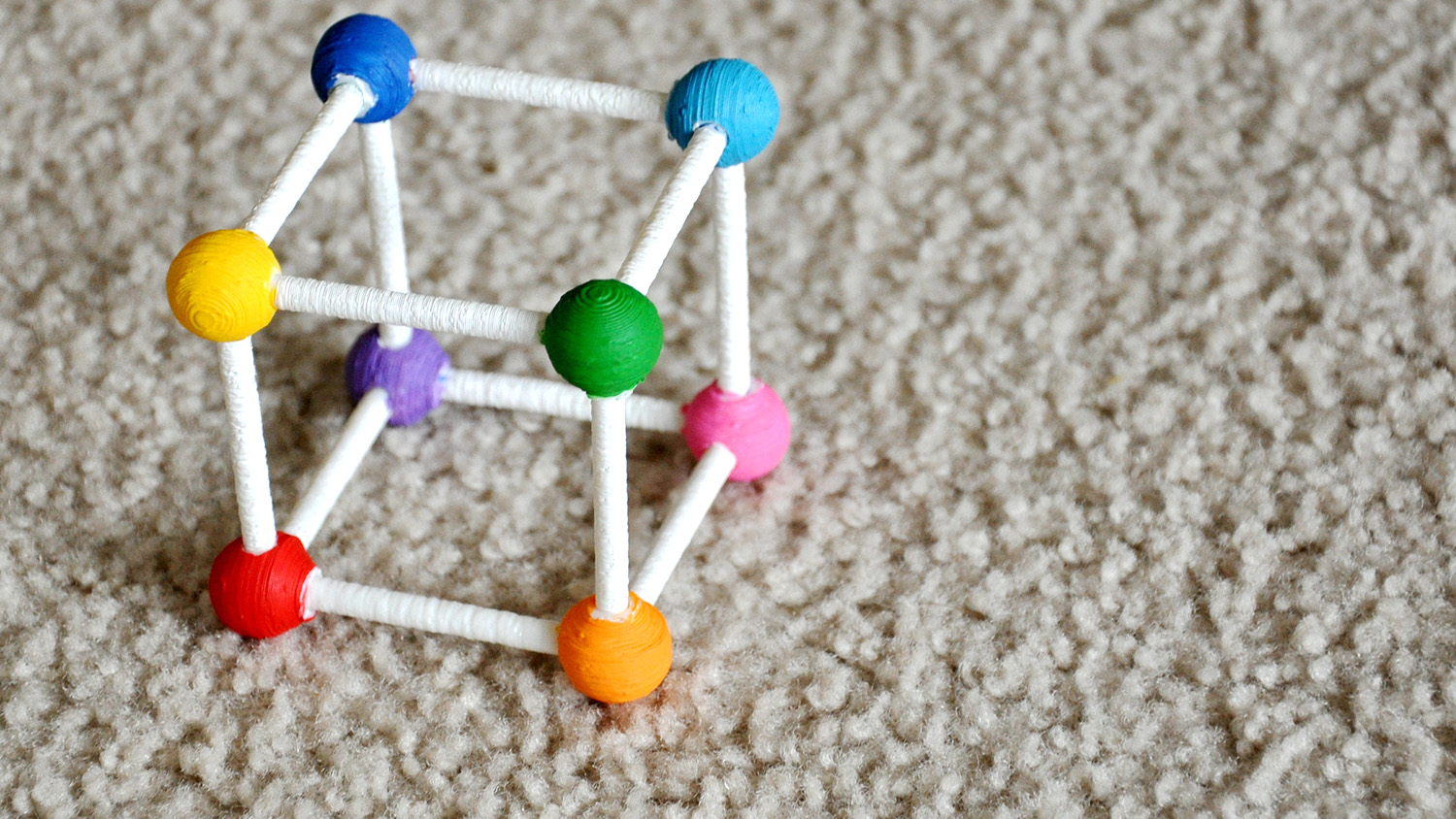

The device, called CAPTIVE, offers users six degrees of freedom (6DoF): movement along three axes plus rotation about those same three axes. Its applications range from video gaming to medical diagnostics to design tools. CAPTIVE uses only three components: a simple cube, the webcam already found on most smartphones and laptops, and custom software.

The cube is plastic, with a differently colored ball at each corner. It resembles a Tinkertoy, but is made using a 3-D printer. As users manipulate the cube, the webcam captures its image, and video recognition software follows the cube's movement in three dimensions by tracking how each colored ball moves in relation to the others. A video demonstrating CAPTIVE is available at https://youtu.be/gRN5bYtYe3M.
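The idea of tracking colored balls relative to one another can be illustrated with a minimal sketch. It assumes a hypothetical three-color palette, treats each video frame as a small grid of RGB pixels, locates each ball as the centroid of pixels near its reference color, and recovers only the in-plane angle between two balls. CAPTIVE's actual recognition software, which estimates full 6DoF pose with automatic color adjustment, is not described in this release, so none of these names or thresholds come from it.

```python
import math

# Hypothetical palette: one reference RGB color per corner ball (an assumption,
# not CAPTIVE's actual colors).
PALETTE = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
}


def color_distance(a, b):
    """Euclidean distance between two RGB triples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_centroids(image, palette=PALETTE, tol=60.0):
    """Return {name: (x, y)}, the centroid of pixels within `tol` of each color.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    A color with no matching pixels (e.g. an occluded ball) is omitted.
    """
    sums = {name: [0.0, 0.0, 0] for name in palette}
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            for name, ref in palette.items():
                if color_distance(pixel, ref) <= tol:
                    s = sums[name]
                    s[0] += x
                    s[1] += y
                    s[2] += 1
    return {name: (s[0] / s[2], s[1] / s[2]) for name, s in sums.items() if s[2] > 0}


def in_plane_angle(p, q):
    """Angle in degrees of the image-space vector from ball p to ball q.

    Image y grows downward, so a positive angle is clockwise on screen.
    """
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))
```

Comparing `in_plane_angle` for the same pair of balls across two frames gives how far the cube turned about the camera's viewing axis; recovering the other rotations and the translation requires a full pose-estimation step that this sketch leaves out.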

“The primary advantage of CAPTIVE is that it is efficient,” says Zeyuan Chen, lead author of a paper on the work and a Ph.D. student in NC State’s Department of Computer Science. “There are a number of tools on the market that can be used to manipulate 3-D virtual objects, but CAPTIVE allows users to perform these tasks much more quickly.”

To test CAPTIVE’s efficiency, researchers performed a suite of standard experiments designed to determine how quickly users can complete a series of tasks.

The researchers found, for example, that CAPTIVE allowed users to rotate objects in three dimensions almost twice as fast as competing technologies did.

“Basically, there’s no latency: no detectable lag time between what the user is doing and what they see on screen,” Chen says.

CAPTIVE is also inexpensive compared to other 6DoF input devices.

“There are no electronic components in the system that aren’t already on your smartphone, tablet or laptop, and 3-D printing the cube is not costly,” Chen says. “That really leaves only the cost of our software.”

The paper, “Performance Characteristics of a Camera-Based Tangible Input Device for Manipulation of 3D Information,” will be presented at the Graphics Interface conference being held in Edmonton, Alberta, May 16-19. The paper was co-authored by Christopher Healey, a professor of computer science at NC State and in the university’s Institute for Advanced Analytics; and Robert St. Amant, an associate professor of computer science at NC State. The work was done with support from the National Science Foundation under grant number 1420159.

-shipman-

Note to Editors: The study abstract follows.

“Performance Characteristics of a Camera-Based Tangible Input Device for Manipulation of 3D Information”

Authors: Zeyuan Chen, Christopher G. Healey and Robert St. Amant, North Carolina State University

Presented: May 16-19, Graphics Interface 2017, Edmonton, Alberta

Abstract: This paper describes a prototype tangible six degree of freedom (6 DoF) input device that is inexpensive and intuitive to use: a cube with colored corners of specific shapes, tracked by a single camera, with pose estimated in real time. A tracking and automatic color adjustment system are designed so that the device can work robustly with noisy surroundings and is invariant to changes in lighting and background noise. A system evaluation shows good performance for both refresh (above 60 FPS on average) and accuracy of pose estimation (average angular error of about 1°). A user study of 3D rotation tasks shows that the device outperforms other 6 DoF input devices used in a similar desktop environment. The device has the potential to facilitate interactive applications such as games as well as viewing 3D information.
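The abstract reports an average angular error of about 1° but does not say how that error is computed. A common choice, assumed here rather than taken from the paper, is the geodesic angle between the estimated and true rotations, θ = arccos((trace(R_estᵀ R_true) − 1) / 2). The helper names below (`angular_error_deg`, `rot_z`, `mean_angular_error`) are hypothetical.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose3(m):
    """Transpose a 3x3 matrix."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def angular_error_deg(r_est, r_true):
    """Geodesic angle in degrees between two 3x3 rotation matrices."""
    r = matmul3(transpose3(r_est), r_true)  # relative rotation R_est^T R_true
    trace = r[0][0] + r[1][1] + r[2][2]
    # Clamp so floating-point drift cannot push the value outside acos's domain.
    c = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(c))


def rot_z(deg):
    """Rotation matrix for `deg` degrees about the z axis."""
    t = math.radians(deg)
    return [[math.cos(t), -math.sin(t), 0.0],
            [math.sin(t), math.cos(t), 0.0],
            [0.0, 0.0, 1.0]]


def mean_angular_error(pairs):
    """Average angular error over (estimated, true) rotation pairs."""
    errors = [angular_error_deg(est, true) for est, true in pairs]
    return sum(errors) / len(errors)
```

For example, an estimate of `rot_z(30)` against a true pose of `rot_z(31)` yields an error of 1°, because the relative rotation is a single 1° turn about the z axis.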
