For Immediate Release

Photos are two-dimensional (2D), but autonomous vehicles and other technologies have to navigate the three-dimensional (3D) world. Researchers have developed a new method to help artificial intelligence (AI) extract 3D information from 2D images, making cameras more useful tools for these emerging technologies.

“Existing techniques for extracting 3D information from 2D images are good, but not good enough,” says Tianfu Wu, co-author of a paper on the work and an associate professor of electrical and computer engineering at North Carolina State University. “Our new method, called MonoXiver, can be used in conjunction with existing techniques – and makes them significantly more accurate.”

The work is particularly useful for applications such as autonomous vehicles. That’s because cameras are less expensive than other tools used to navigate 3D spaces, such as LIDAR, which relies on lasers to measure distance. That affordability lets designers of autonomous vehicles install multiple cameras, building redundancy into the system. But redundancy is only useful if the AI in the autonomous vehicle can extract 3D navigational information from the 2D images taken by a camera. This is where MonoXiver comes in.

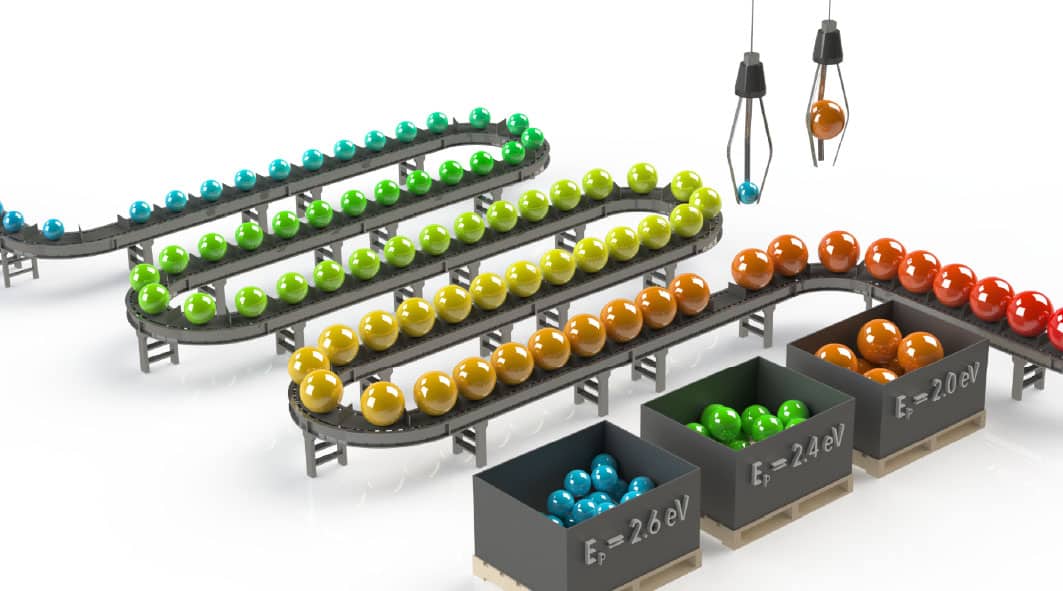

Existing techniques that extract 3D data from 2D images – such as the MonoCon technique developed by Wu and his collaborators – make use of “bounding boxes.” Specifically, these techniques train AI to scan a 2D image and place 3D bounding boxes around objects in the 2D image, such as each car on a street. These boxes are cuboids, which have eight corners – think of the corners on a shoebox. The bounding boxes help the AI estimate the dimensions of the objects in an image, and where each object is in relation to other objects. In other words, the bounding boxes can help the AI determine how big a car is, and where it is in relation to the other cars on the road.
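As a rough illustration of the “shoebox” idea, the sketch below computes the eight corners of a 3D bounding box from its center, its dimensions and its heading. The function name, the z-up axis convention and the example numbers are assumptions made for illustration; they are not taken from MonoCon or MonoXiver.

```python
import math
from itertools import product


def cuboid_corners(center, dims, yaw):
    """Return the eight (x, y, z) corners of a 3D bounding box.

    center: (x, y, z) of the box center, in meters
    dims:   (length, width, height), in meters
    yaw:    rotation about the vertical axis, in radians
    Assumption: z points up; real datasets may use other conventions.
    """
    cx, cy, cz = center
    length, width, height = dims
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    # Each corner is one +/- combination of the three half-sizes.
    for sx, sy, sz in product((-1, 1), repeat=3):
        dx, dy, dz = sx * length / 2, sy * width / 2, sz * height / 2
        # Rotate the horizontal offset by the heading, then shift to the center.
        x = cx + cos_y * dx - sin_y * dy
        y = cy + sin_y * dx + cos_y * dy
        z = cz + dz
        corners.append((x, y, z))
    return corners


# Hypothetical car-sized box: 4 m long, 2 m wide, 1.5 m tall.
corners = cuboid_corners(center=(10.0, 2.0, 0.75), dims=(4.0, 2.0, 1.5), yaw=0.0)
```

Seven numbers (three for position, three for size, one for heading) are enough to pin down all eight corners, which is why an error in the estimated center shifts the whole box.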

However, the bounding boxes of existing programs are imperfect, and often fail to include parts of a vehicle or other object that appears in a 2D image.

The new MonoXiver method uses each bounding box as a starting point, or anchor, and has the AI perform a second analysis of the area surrounding each bounding box. This second analysis results in the program producing many additional bounding boxes surrounding the anchor.
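The study abstract describes this step as “local-grid sampling” around the initial proposals. A minimal sketch of that idea, assuming the grid is applied to the box center only, might look like the following. The function name, the grid spacing (`step`) and the grid extent (`radius`) are hypothetical; the values used by the researchers are not given here.

```python
from itertools import product


def local_grid_proposals(anchor_center, step=0.5, radius=1):
    """Generate candidate box centers on a small 3D grid around an anchor.

    anchor_center: (x, y, z) center of the initial bounding box
    step:          spacing between grid points, in meters (assumed value)
    radius:        number of grid steps on each side of the anchor (assumed value)
    """
    ax, ay, az = anchor_center
    offsets = range(-radius, radius + 1)
    proposals = []
    # Shift the anchor center along every combination of grid offsets.
    for i, j, k in product(offsets, repeat=3):
        proposals.append((ax + i * step, ay + j * step, az + k * step))
    return proposals


# With radius=1 the grid is 3 x 3 x 3, so one anchor yields 27 candidates,
# including the anchor itself (all offsets zero).
proposals = local_grid_proposals((10.0, 2.0, 0.75), step=0.5, radius=1)
```

Because the candidates sit close together, many of them overlap heavily, so a separate step is needed to decide which one fits the object best.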

To determine which of these secondary boxes has best captured any “missing” parts of the object, the AI does two comparisons. One comparison looks at the “geometry” of each secondary box to see if it contains shapes that are consistent with the shapes in the anchor box. The other comparison looks at the “appearance” of each secondary box to see if it contains colors or other visual characteristics that are similar to the visual characteristics of what is within the anchor box.
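The toy example below shows only the general shape of such a two-cue comparison: each candidate box is scored by how closely its geometry and appearance features match those of the anchor, and the highest-scoring candidate wins. It is a deliberate simplification. According to the study abstract, MonoXiver fuses the two kinds of information with a learned Perceiver I/O model rather than a fixed formula, and the feature vectors, the equal weighting and the function names here are all invented for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pick_best(anchor, candidates):
    """Return the candidate whose features best match the anchor's.

    Assumption: geometry and appearance count equally (0.5 each).
    """
    def score(candidate):
        geometry = cosine(anchor["geometry"], candidate["geometry"])
        appearance = cosine(anchor["appearance"], candidate["appearance"])
        return 0.5 * geometry + 0.5 * appearance

    return max(candidates, key=score)


# Made-up feature vectors for an anchor box and two secondary boxes.
anchor = {"geometry": [1.0, 0.0], "appearance": [0.2, 0.8]}
candidates = [
    {"name": "a", "geometry": [0.0, 1.0], "appearance": [0.8, 0.2]},
    {"name": "b", "geometry": [1.0, 0.1], "appearance": [0.25, 0.75]},
]
best = pick_best(anchor, candidates)  # candidate "b" matches on both cues
```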

“One significant advance here is that MonoXiver allows us to run this top-down sampling technique – creating and analyzing the secondary bounding boxes – very efficiently,” Wu says.

To measure the accuracy of the MonoXiver method, the researchers tested it using two datasets of 2D images: the well-established KITTI dataset and the more challenging, large-scale Waymo dataset.

“We used the MonoXiver method in conjunction with MonoCon and two other existing programs that are designed to extract 3D data from 2D images, and MonoXiver significantly improved the performance of all three programs,” Wu says. “We got the best performance when using MonoXiver in conjunction with MonoCon.

“It’s also important to note that this improvement comes with relatively minor computational overhead,” Wu says. “For example, MonoCon, by itself, can run at 55 frames per second. That slows down to 40 frames per second when you incorporate the MonoXiver method – which is still fast enough for practical utility.

“We are excited about this work, and will continue to evaluate and fine-tune it for use in autonomous vehicles and other applications,” Wu says.
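The frame rates Wu cites can be converted into per-frame processing time to show the size of the overhead. The short calculation below uses only the two figures quoted above, 55 and 40 frames per second; the variable names are illustrative.

```python
def frame_time_ms(fps):
    """Convert a frame rate into milliseconds spent on each frame."""
    return 1000.0 / fps


base_ms = frame_time_ms(55)        # MonoCon alone: about 18.2 ms per frame
refined_ms = frame_time_ms(40)     # MonoCon plus MonoXiver: 25.0 ms per frame
added_ms = refined_ms - base_ms    # extra time per frame: about 6.8 ms
throughput_drop_pct = 100.0 * (55 - 40) / 55  # about 27.3% fewer frames per second

print(f"Added time per frame: {added_ms:.1f} ms")
print(f"Drop in frames per second: {throughput_drop_pct:.1f}%")
```

In other words, the refinement step adds roughly 7 milliseconds to each frame.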

The paper, “Monocular 3D Object Detection with Bounding Box Denoising in 3D by Perceiver,” will be presented Oct. 4 at the International Conference on Computer Vision in Paris, France. First author of the paper is Xianpeng Liu, a Ph.D. student at NC State. The paper was co-authored by Kelvin Cheng, a former Ph.D. student at NC State; Ce Zheng, of the University of Central Florida; Nan Xue, of Ant Group; and Guo-Jun Qi, of the OPPO Seattle Research Center and Westlake University.

The work was done with support from the U.S. Army Research Office, under grants W911NF1810295 and W911NF2210010; and from the National Science Foundation, under grants 1909644, 1822477, 2024688 and 2013451.

-shipman-

Note to Editors: The study abstract follows.

“Monocular 3D Object Detection with Bounding Box Denoising in 3D by Perceiver”

Authors: Xianpeng Liu, Kelvin Cheng and Tianfu Wu, North Carolina State University; Ce Zheng, University of Central Florida; Nan Xue, Ant Group; and Guo-Jun Qi, OPPO Seattle Research Center and Westlake University

Presented: Oct. 4, International Conference on Computer Vision (ICCV23), Paris, France

Abstract: The main challenge of monocular 3D object detection is the accurate localization of 3D center. Motivated by a new and strong observation that this challenge can be remedied by a 3D-space local-grid search scheme in an ideal case, we propose a stage-wise approach, which combines the information flow from 2D-to-3D (3D bounding box proposal generation with a single 2D image) and 3D-to-2D (proposal verification by denoising with 3D-to-2D contexts) in a top-down manner. Specifically, we first obtain initial proposals from off-the-shelf backbone monocular 3D detectors. Then, we generate a 3D anchor space by local-grid sampling from the initial proposals. Finally, we perform 3D bounding box denoising at the 3D-to-2D proposal verification stage. To effectively learn discriminative features for denoising highly overlapped proposals, this paper presents a method of using the Perceiver I/O model to fuse the 3D-to-2D geometric information and the 2D appearance information. With the encoded latent representation of a proposal, the verification head is implemented with a self-attention module. Our method, named as MonoXiver, is generic and can be easily adapted to any backbone monocular 3D detectors. Experimental results on the well-established KITTI dataset and the challenging large-scale Waymo dataset show that MonoXiver consistently achieves improvement with limited computation overhead.