For Immediate Release

Researchers have developed a new training tool to help artificial intelligence (AI) programs better account for the fact that humans don’t always tell the truth when providing personal information. The new tool was developed for use in contexts where humans have an economic incentive to lie, such as applying for a mortgage or trying to lower their insurance premiums.

“AI programs are used in a wide variety of business contexts, such as helping to determine how large of a mortgage an individual can afford, or what an individual’s insurance premiums should be,” says Mehmet Caner, co-author of a paper on the work. “These AI programs generally use mathematical algorithms driven solely by statistics to do their forecasting. But the problem is that this approach creates incentives for people to lie, so that they can get a mortgage, lower their insurance premiums, and so on.

“We wanted to see if there was some way to adjust AI algorithms in order to account for these economic incentives to lie,” says Caner, who is the Thurman-Raytheon Distinguished Professor of Economics in North Carolina State University’s Poole College of Management.

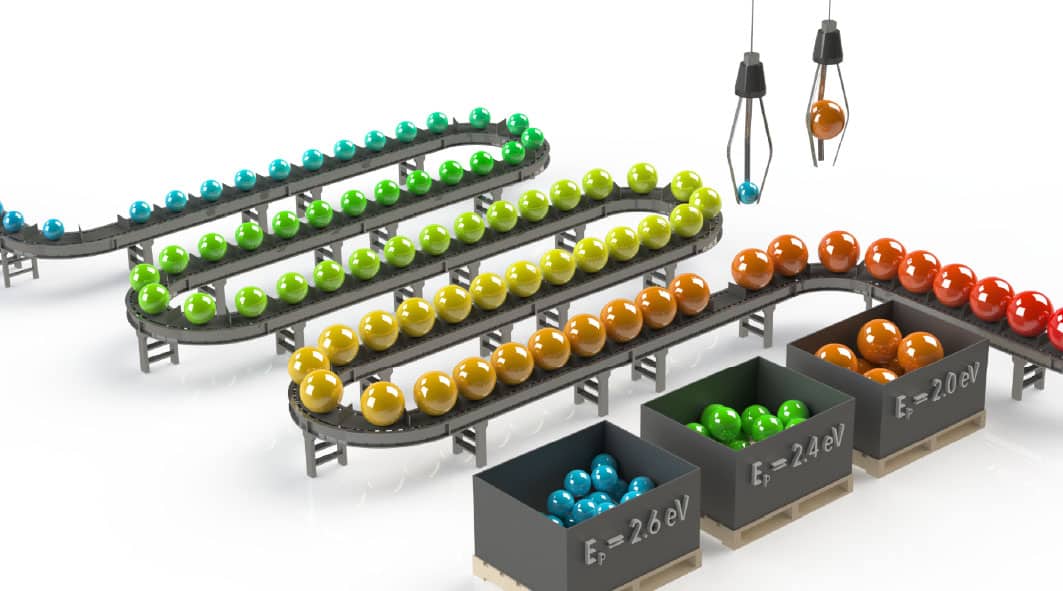

To address this challenge, the researchers developed a new set of training parameters that can be used to inform how the AI teaches itself to make predictions. Specifically, the new training parameters focus on recognizing and accounting for a human user’s economic incentives. In other words, the AI trains itself to recognize circumstances in which a human user might lie to improve their outcomes.

In proof-of-concept simulations, the modified AI was better able to detect inaccurate information from users.

“This effectively reduces a user’s incentive to lie when submitting information,” Caner says. “However, small lies can still go undetected. We need to do some additional work to better understand where the threshold is between a ‘small lie’ and a ‘big lie.’”

The researchers are making the new AI training parameters publicly available so that AI developers can experiment with them.

“This work shows we can improve AI programs to reduce economic incentives for humans to lie,” Caner says. “At some point, if we make the AI clever enough, we may be able to eliminate those incentives altogether.”

The paper, “Should Humans Lie to Machines? The Incentive Compatibility of Lasso and GLM Structured Sparsity Estimators,” is published in the Journal of Business & Economic Statistics. The paper was co-authored by Kfir Eliaz of Tel-Aviv University and the University of Utah.

-shipman-

Note to Editors: The study abstract follows.

“Should Humans Lie to Machines? The Incentive Compatibility of Lasso and GLM Structured Sparsity Estimators”

Authors: Mehmet Caner, North Carolina State University; and Kfir Eliaz, Tel-Aviv University and the University of Utah

Published: March 12, Journal of Business & Economic Statistics

DOI: 10.1080/07350015.2024.2316102

Abstract: We consider situations where a user feeds her attributes to a machine learning method that tries to predict her best option based on a random sample of other users. The predictor is incentive-compatible if the user has no incentive to misreport her covariates. Focusing on the popular Lasso estimation technique, we borrow tools from high-dimensional statistics to characterize sufficient conditions that ensure that Lasso is incentive compatible in the asymptotic case. We extend our results to a new nonlinear machine learning technique, Generalized Linear Model Structured Sparsity estimators. Our results show that incentive compatibility is achieved if the tuning parameter is kept above some threshold in the case of asymptotics.
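The abstract's central claim is that incentive compatibility holds when the Lasso tuning parameter stays above some threshold. One familiar intuition behind that kind of result can be loosely illustrated with a toy example. The Python sketch below is not the authors' method or code, and it does not reproduce their asymptotic conditions. It assumes a small, made-up data set with two orthogonal, centered covariates (one strongly predictive, one weakly predictive), no intercept, and a hypothetical `misreport_gain` helper that measures how much a user could lower her predicted value by misreporting one covariate. It fits Lasso by plain cyclic coordinate descent with soft thresholding, minimizing (1/2n)·||y − Xβ||² + λ·||β||₁.

```python
"""Toy illustration (not the paper's method): a larger Lasso penalty can
remove the payoff from misreporting a weakly predictive covariate."""


def soft_threshold(z: float, t: float) -> float:
    """Soft-thresholding operator S(z, t) = sign(z) * max(|z| - t, 0)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, n_iter=100):
    """Minimize (1/(2n))||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent.

    No intercept is fitted; the toy data below are already centered.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # correlation of feature j with the partial residual
            z = 0.0    # squared norm of feature j
            for i in range(n):
                # Prediction from every feature except j.
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
                z += X[i][j] ** 2
            beta[j] = soft_threshold(rho / n, lam) / (z / n)
    return beta


def predict(beta, x):
    """Linear prediction for a single user's reported covariates."""
    return sum(b * xi for b, xi in zip(beta, x))


def misreport_gain(beta, true_x, reported_x):
    """Hypothetical helper: how much lower the prediction is when the user
    reports `reported_x` instead of her true covariates."""
    return predict(beta, true_x) - predict(beta, reported_x)


# Made-up data: covariate 0 is strongly predictive, covariate 1 only weakly.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [2.3, -1.7, 1.7, -2.3]  # y = 2.0 * x0 + 0.3 * x1

true_x = [1.0, 1.0]
lie_x = [1.0, -1.0]  # the user flips the weak covariate to look lower-risk

for lam in (0.0, 0.5):
    beta = lasso_cd(X, y, lam)
    gain = misreport_gain(beta, true_x, lie_x)
    print(f"lam={lam}: beta={[round(b, 3) for b in beta]}, gain from lying={gain:.3f}")
```

In this toy, the unpenalized fit (lam = 0) leaves a gain of about 0.6 from the lie, while lam = 0.5 shrinks the weak covariate's coefficient exactly to zero, so the same lie no longer moves the prediction. The strong covariate keeps a nonzero coefficient, and a penalty large enough to zero out every coefficient would also discard the useful signal; the sketch says nothing about where the paper's threshold actually lies.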