Reinforcement Learning Expedites ‘Tuning’ of Robotic Prosthetics

For Immediate Release

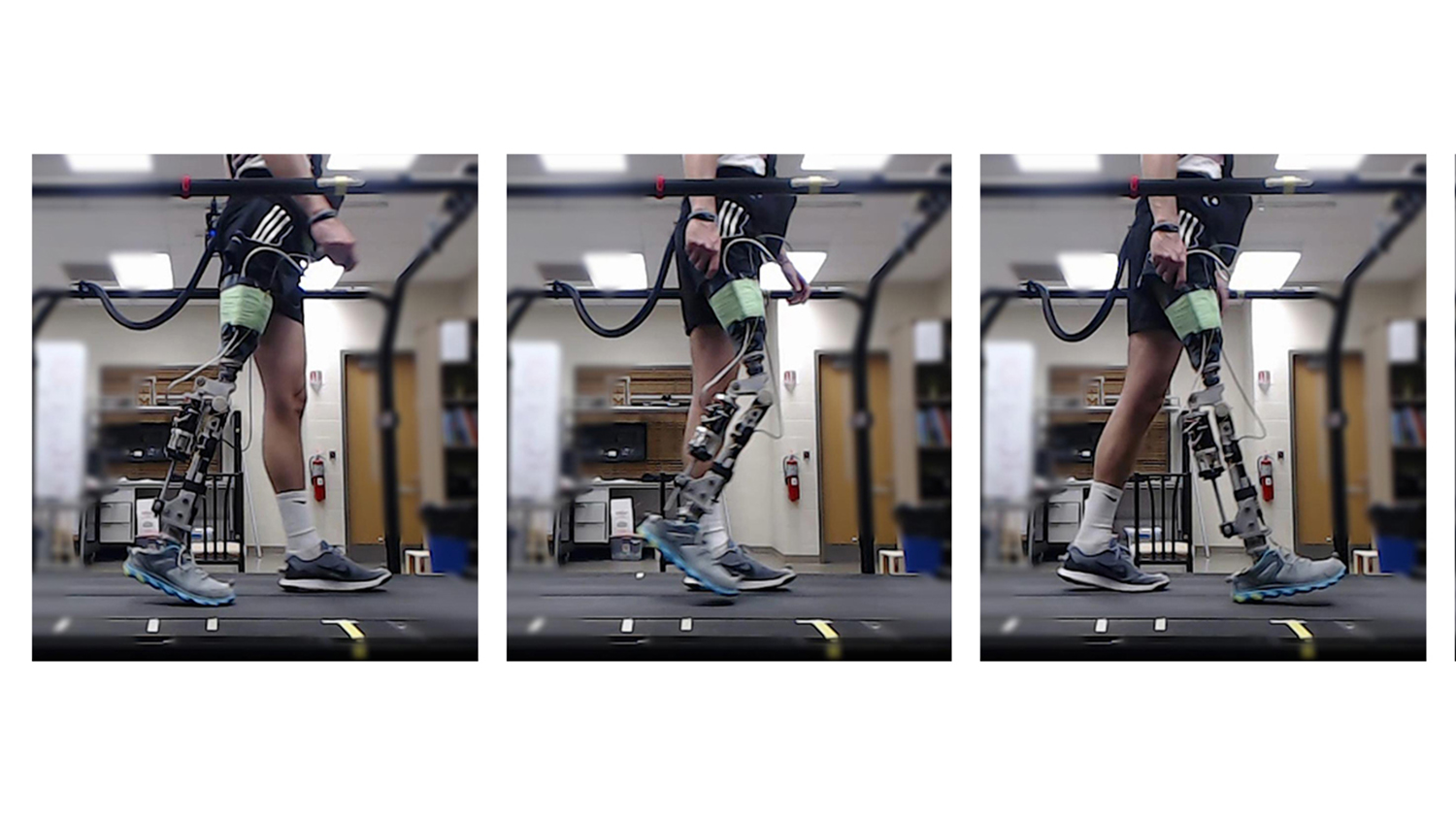

Researchers from North Carolina State University, the University of North Carolina and Arizona State University have developed an intelligent system for “tuning” powered prosthetic knees, allowing patients to walk comfortably with the prosthetic device in minutes, rather than the hours necessary if the device is tuned by a trained clinical practitioner. The system is the first to rely solely on reinforcement learning to tune the robotic prosthesis.

When a patient receives a robotic prosthetic knee, the device needs to be tuned to accommodate that specific patient. The new tuning system tweaks 12 different control parameters, addressing prosthesis dynamics, such as joint stiffness, throughout the entire gait cycle.

Normally, a human practitioner works with the patient to modify a handful of parameters. This can take hours. The new system relies on a computer program that uses reinforcement learning to modify all 12 parameters. With it, patients can walk on a level surface with a powered prosthetic knee after about 10 minutes of tuning.

“We begin by giving a patient a powered prosthetic knee with a randomly selected set of parameters,” says Helen Huang, co-author of a paper on the work and a professor in the Joint Department of Biomedical Engineering at NC State and UNC. “We then have the patient begin walking, under controlled circumstances.

“Data on the device and the patient’s gait are collected via a suite of sensors in the device,” Huang says. “A computer model adapts parameters on the device and compares the patient’s gait to the profile of a normal walking gait in real time. The model can tell which parameter settings improve performance and which settings impair performance. Using reinforcement learning, the computational model can quickly identify the set of parameters that allows the patient to walk normally. Existing approaches, relying on trained clinicians, can take half a day.”
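For readers who want a feel for the tuning loop Huang describes, the following is a minimal sketch, not the researchers' method. The paper uses direct Heuristic Dynamic Programming. This sketch swaps in simple random-perturbation hill climbing to show only the basic idea of trying parameter changes and keeping those that bring the gait closer to a target. The function names, the `ideal` parameter vector, the step size and the squared-error measure are all assumptions made for illustration. Only the count of 12 parameters and the figure of roughly 300 gait cycles come from the study.

```python
import random

NUM_PARAMS = 12  # the release says the system tunes 12 control parameters


def gait_error(params, ideal):
    """Hypothetical stand-in for comparing measured gait to a normative profile.

    Returns the sum of squared differences between the current parameters and
    an assumed 'ideal' setting that the tuner cannot observe directly.
    """
    return sum((p - q) ** 2 for p, q in zip(params, ideal))


def tune(ideal, cycles=300, step=0.1, seed=0):
    """Random-perturbation hill climbing: keep a change only if error drops.

    This is an illustrative substitute for the paper's dHDP controller.
    """
    rng = random.Random(seed)
    # Start from a randomly selected set of parameters, as in the study.
    params = [rng.uniform(0.0, 1.0) for _ in range(NUM_PARAMS)]
    error = gait_error(params, ideal)
    history = [error]
    for _ in range(cycles):
        # Propose a small random change to every parameter.
        candidate = [p + rng.gauss(0.0, step) for p in params]
        candidate_error = gait_error(candidate, ideal)
        if candidate_error < error:
            # Settings that improve performance are kept; others are discarded.
            params, error = candidate, candidate_error
        history.append(error)
    return params, history


if __name__ == "__main__":
    ideal = [0.5] * NUM_PARAMS  # assumed target, for demonstration only
    _, history = tune(ideal)
    print(f"error before: {history[0]:.3f}, after: {history[-1]:.3f}")
```

In this toy version, the error can never increase from one cycle to the next, because a candidate setting is adopted only when it scores better. A real controller would also have to cope with sensor noise and a human user who adapts at the same time, which this sketch ignores.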

While the work is currently done in a controlled, clinical setting, one goal is to develop a wireless version of the system, which would allow users to continue fine-tuning the powered prosthesis parameters while using the device in real-world environments.

“This work was done for scenarios in which a patient is walking on a level surface, but in principle, we could also develop reinforcement learning controllers for situations such as ascending or descending stairs,” says Jennie Si, co-author of the paper and a professor of electrical, computer and energy engineering at ASU.

“I have worked on reinforcement learning from the dynamic system control perspective, which takes into account sensor noise, interference from the environment, and the demand of system safety and stability,” Si says. “I recognized the unprecedented challenge of learning to control, in real time, a prosthetic device that is simultaneously affected by the human user. This is a co-adaptation problem that does not have a readily available solution from either classical control designs or the current, state-of-the-art reinforcement learning controlled robots. We are thrilled to find out that our reinforcement learning control algorithm actually did learn to make the prosthetic device work as part of a human body in such an exciting applications setting.”

Huang says researchers hope to make the process even more efficient. “For example, we think we may be able to improve the process by identifying combinations of parameters that are more or less likely to succeed, and training the model to focus first on the most promising parameter settings.”

The researchers note that, while this work is promising, many questions need to be addressed before the system is available for widespread use.

“For example, the prosthesis tuning goal in this study is to meet normative knee motion in walking,” Huang says. “We did not consider other gait performance (such as gait symmetry) or the user’s preference. For another example, our tuning method can be used to fine-tune the device outside of the clinics and labs to make the system adaptive over time with the user’s need. However, we need to ensure the safety in real-world use since errors in control might lead to stumbling and falls. Additional testing is needed to show safety.”

The researchers also note that, if the system proves effective and enters widespread use, it would likely reduce costs for patients by limiting the need for clinical visits to work with practitioners.

The paper, “Online Reinforcement Learning Control for the Personalization of a Robotic Knee Prosthesis,” is published in the journal IEEE Transactions on Cybernetics. First author of the paper is Yue Wen, a Ph.D. biomedical engineering student at NC State and UNC. Additional co-authors include Andrea Brandt, a Ph.D. biomedical engineering student at NC State and UNC; and Xiang Gao, a Ph.D. student at ASU.

The work was done with support from the National Science Foundation under grant numbers 1563454, 1563921, 1808752 and 1808898.

-shipman-

Note to Editors: The study abstract follows.

“Online Reinforcement Learning Control for the Personalization of a Robotic Knee Prosthesis”

Authors: Yue Wen, Andrea Brandt and He (Helen) Huang, Joint Department of Biomedical Engineering at North Carolina State University and the University of North Carolina at Chapel Hill; Jennie Si and Xiang Gao, Arizona State University

Published: Jan. 16, IEEE Transactions on Cybernetics

DOI: 10.1109/TCYB.2019.2890974

Abstract: Robotic prostheses deliver greater function than passive prostheses, but we face the challenge of tuning a large number of control parameters in order to personalize the device for individual amputee users. This problem is not easily solved by traditional control designs or the latest robotic technology. Reinforcement learning (RL) is naturally appealing. The recent, unprecedented success of AlphaZero demonstrated RL as a feasible, large-scale problem solver. However, the prosthesis-tuning problem is associated with several unaddressed issues such as that it does not have a known and stable model, the continuous states and controls of the problem may result in a curse of dimensionality, and the human-prosthesis system is constantly subject to measurement noise, environment change, and human body caused variations. In this study, we demonstrated the feasibility of direct Heuristic Dynamic Programming (dHDP), an approximate dynamic programming (ADP) approach, to automatically tune the 12 robotic knee prosthesis parameters to meet individual human users’ needs. We tested the ADP-tuner on two subjects (one able-bodied subject and one amputee subject) walking at a fixed speed on a treadmill. The ADP-tuner learned to reach target gait kinematics in an average of 300 gait cycles or 10 minutes of walking. We observed improved ADP tuning performance when we transferred a previously-learned ADP controller to a new learning session with the same subject. To the best of our knowledge, our approach to personalize robotic prostheses is the first implementation of online ADP learning control to a clinical problem involving human subjects.
