Researchers Fine-Tune Control Over AI Image Generation

For Immediate Release

Researchers from North Carolina State University have developed a new state-of-the-art method for controlling how artificial intelligence (AI) systems create images. The work has applications in fields ranging from autonomous robotics to AI training.

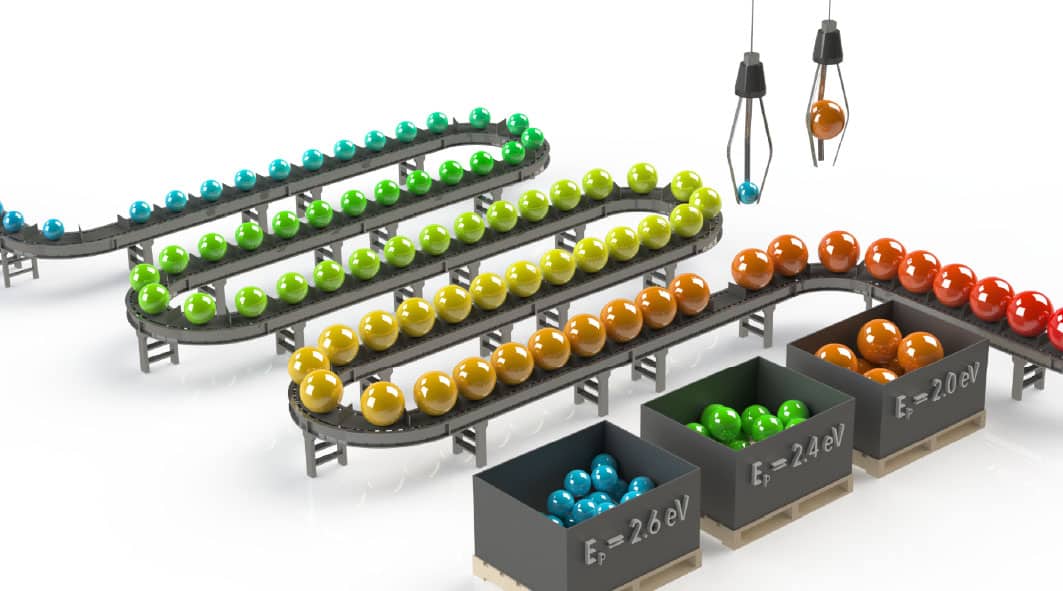

At issue is a type of AI task called conditional image generation, in which AI systems create images that meet a specific set of conditions. For example, a system could be trained to create original images of cats or dogs, depending on which animal the user requests. More recent techniques have built on this by adding conditions on image layout, which let users specify which types of objects should appear in particular places in the image. For example, the sky might go in one box, a tree in another, a stream in a third, and so on.
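Layout conditions like these are commonly given to a model as labeled bounding boxes. The Python sketch below shows one simple way to represent such a layout and turn it into one binary mask per object. It is an illustration under our own assumptions, not code from the researchers: the `LayoutObject` class, the `rasterize_layout` function, and the normalized `(x0, y0, x1, y1)` box convention are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayoutObject:
    """Hypothetical layout entry: a class label and a bounding box.

    Boxes use normalized (x0, y0, x1, y1) coordinates in [0, 1].
    """
    label: str
    box: tuple


def rasterize_layout(objects, height, width):
    """Turn each object's box into a height x width binary mask (list of rows)."""
    masks = []
    for obj in objects:
        x0, y0, x1, y1 = obj.box
        # Convert normalized coordinates to pixel indices (end-exclusive).
        c0, c1 = round(x0 * width), round(x1 * width)
        r0, r1 = round(y0 * height), round(y1 * height)
        mask = [[1 if (r0 <= r < r1 and c0 <= c < c1) else 0
                 for c in range(width)]
                for r in range(height)]
        masks.append(mask)
    return masks


# The sky, tree, and stream example from the text, on a 10 x 10 grid.
layout = [
    LayoutObject("sky", (0.0, 0.0, 1.0, 0.4)),
    LayoutObject("tree", (0.6, 0.2, 0.9, 0.8)),
    LayoutObject("stream", (0.0, 0.7, 0.5, 1.0)),
]
masks = rasterize_layout(layout, height=10, width=10)
```

Overlapping boxes (here, the sky and the tree) simply produce overlapping masks. In this sketch, deciding what actually appears where boxes overlap is left to the image generator.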

The new work builds on those techniques to give users more control over the resulting images, and to retain certain characteristics across a series of images.

“Our approach is highly reconfigurable,” says Tianfu Wu, co-author of a paper on the work and an assistant professor of computer engineering at NC State. “Like previous approaches, ours allows users to have the system generate an image based on a specific set of conditions. But ours also allows you to retain that image and add to it. For example, users could have the AI create a mountain scene. The users could then have the system add skiers to that scene.”

In addition, the new approach allows users to have the AI manipulate specific elements so that they remain identifiably the same but have moved or changed in some way. For example, the AI might create a series of images showing skiers turning toward the viewer as they move across the landscape.
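One way to picture this kind of reconfiguration is as a series of edits to the layout input, with each object's latent style code (the study abstract describes style codes as latent vectors) held fixed so the object stays recognizable. The hypothetical Python sketch below adds a skier to a mountain scene and then shifts the skier's box across several frames while reusing the same style code. `StyledObject`, `sample_style`, `add_object`, and `move_object` are illustrative names, not part of the researchers' code, and the sketch only builds inputs; it does not generate images.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StyledObject:
    """Hypothetical layout entry: label, normalized (x0, y0, x1, y1) box, latent style code."""
    label: str
    box: tuple
    style: tuple


def sample_style(dim, seed):
    """Draw a Gaussian latent style code; a fixed seed makes it reproducible."""
    rng = random.Random(seed)
    return tuple(rng.gauss(0.0, 1.0) for _ in range(dim))


def add_object(layout, obj):
    """Return a new layout with obj appended; existing objects are untouched."""
    return layout + [obj]


def move_object(layout, index, dx, dy):
    """Return a new layout where one object's box is shifted but its style code is reused."""
    obj = layout[index]
    x0, y0, x1, y1 = obj.box
    moved = replace(obj, box=(x0 + dx, y0 + dy, x1 + dx, y1 + dy))
    return layout[:index] + [moved] + layout[index + 1:]


# Start with a mountain scene, then add a skier to it.
scene = [StyledObject("mountain", (0.0, 0.1, 1.0, 0.7), sample_style(8, seed=1))]
scene = add_object(scene, StyledObject("skier", (0.1, 0.6, 0.2, 0.9), sample_style(8, seed=2)))

# Build a short sequence in which the skier moves right with the same style code.
frames = [scene]
for _ in range(3):
    frames.append(move_object(frames[-1], index=1, dx=0.2, dy=0.0))
```

Holding the style code fixed while editing only the box is the simplest version of this idea. Changing an object's pose, as with skiers turning toward the viewer, would require input changes that this sketch does not model.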

“One application for this would be to help autonomous robots ‘imagine’ what the end result might look like before they begin a given task,” Wu says. “You could also use the system to generate images for AI training. So, instead of compiling images from external sources, you could use this system to create images for training other AI systems.”

The researchers tested their new approach using the COCO-Stuff dataset and the Visual Genome dataset. Based on standard measures of image quality, the new approach outperformed the previous state-of-the-art image creation techniques.

“Our next step is to see if we can extend this work to video and three-dimensional images,” Wu says.

Training the new system requires a fair amount of computational power; the researchers used a four-GPU workstation. However, deploying the trained system is less computationally expensive.

“We found that one GPU gives you almost real-time speed,” Wu says.

“In addition to our paper, we’ve made our source code for this approach available on GitHub. That said, we’re always open to collaborating with industry partners.”

The paper, “Learning Layout and Style Reconfigurable GANs for Controllable Image Synthesis,” is published in the journal IEEE Transactions on Pattern Analysis and Machine Intelligence. First author of the paper is Wei Sun, a recent Ph.D. graduate from NC State.

The work was supported by the National Science Foundation, under grants 1909644, 1822477, 2024688 and 2013451; by the U.S. Army Research Office, under grant W911NF1810295; and by the Administration for Community Living, under grant 90IFDV0017-01-00.

-shipman-

Note to Editors: The study abstract follows.

“Learning Layout and Style Reconfigurable GANs for Controllable Image Synthesis”

Authors: Wei Sun and Tianfu Wu, North Carolina State University

Published: May 10, IEEE Transactions on Pattern Analysis and Machine Intelligence

DOI: 10.1109/TPAMI.2021.3078577

Abstract: With the remarkable recent progress on learning deep generative models, it becomes increasingly interesting to develop models for controllable image synthesis from reconfigurable structured inputs. This paper focuses on a recently emerged task, layout-to-image, whose goal is to learn generative models for synthesizing photo-realistic images from a spatial layout (i.e., object bounding boxes configured in an image lattice) and its style codes (i.e., structural and appearance variations encoded by latent vectors). This paper first proposes an intuitive paradigm for the task, layout-to-mask-to-image, which learns to unfold object masks in a weakly-supervised way based on an input layout and object style codes. The layout-to-mask component deeply interacts with layers in the generator network to bridge the gap between an input layout and synthesized images. Then, this paper presents a method built on Generative Adversarial Networks (GANs) for the proposed layout-to-mask-to-image synthesis with layout and style control at both image and object levels. The controllability is realized by a proposed novel Instance-Sensitive and Layout-Aware Normalization (ISLA-Norm) scheme. A layout semi-supervised version of the proposed method is further developed without sacrificing performance. In experiments, the proposed method is tested in the COCO-Stuff dataset and the Visual Genome dataset with state-of-the-art performance obtained.
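The abstract attributes the method's controllability to ISLA-Norm but does not spell out its mechanics. As a rough illustration of what an instance-sensitive, layout-aware normalization step could look like, here is a simplified Python sketch under our own assumptions. Each object's style code is projected to a scale and shift, these values are spread over the image using the object's mask, and they modulate a normalized feature map. The names `normalize`, `style_to_affine`, and `layout_aware_norm`, the linear projection weights, the `1.0 +` offset on the scale, the single-channel spatial normalization, and the averaging of overlapping masks are all our own simplifications, not details taken from the paper. In the paper, masks are learned from the layout rather than given as input.

```python
import math


def normalize(feature):
    """Standardize a 2-D feature map (list of rows) to zero mean, unit variance."""
    values = [v for row in feature for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + 1e-5)  # small epsilon avoids division by zero
    return [[(v - mean) / std for v in row] for row in feature]


def style_to_affine(style, w_gamma, w_beta):
    """Hypothetical linear projection of a style code to a scalar scale and shift."""
    gamma = 1.0 + sum(s * w for s, w in zip(style, w_gamma))
    beta = sum(s * w for s, w in zip(style, w_beta))
    return gamma, beta


def layout_aware_norm(feature, masks, styles, w_gamma, w_beta):
    """Normalize a feature map, then apply a per-pixel affine transform.

    Each object's (gamma, beta) is spread over the image by its mask; where
    masks overlap, values are averaged by mask weight. Pixels covered by no
    object keep gamma = 1 and beta = 0, so they are only normalized.
    """
    normed = normalize(feature)
    affine = [style_to_affine(s, w_gamma, w_beta) for s in styles]
    height, width = len(feature), len(feature[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            weight = sum(m[r][c] for m in masks)
            if weight > 0:
                gamma = sum(m[r][c] * g for m, (g, _) in zip(masks, affine)) / weight
                beta = sum(m[r][c] * b for m, (_, b) in zip(masks, affine)) / weight
            else:
                gamma, beta = 1.0, 0.0
            out[r][c] = gamma * normed[r][c] + beta
    return out


# Toy 2x2 feature map: object A covers the top row, object B the bottom-right pixel.
feature = [[1.0, 2.0], [3.0, 4.0]]
masks = [
    [[1.0, 1.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
styles = [(0.5,), (-0.5,)]
out = layout_aware_norm(feature, masks, styles, w_gamma=(1.0,), w_beta=(2.0,))
```

Because each object's scale and shift come from its own style code, changing one object's code or mask in this sketch affects only the pixels that object covers. This is the kind of per-object control the release describes.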