Algorithm Makes Hyperspectral Imaging Faster

For Immediate Release

Researchers from North Carolina State University and the University of Delaware have developed an algorithm that can quickly and accurately reconstruct hyperspectral images using less data. The images are created using instruments that capture hyperspectral information succinctly, and the combination of algorithm and hardware makes it possible to acquire hyperspectral images in less time and to store those images using less memory.

Hyperspectral imaging holds promise for use in fields ranging from security and defense to environmental monitoring and agriculture.

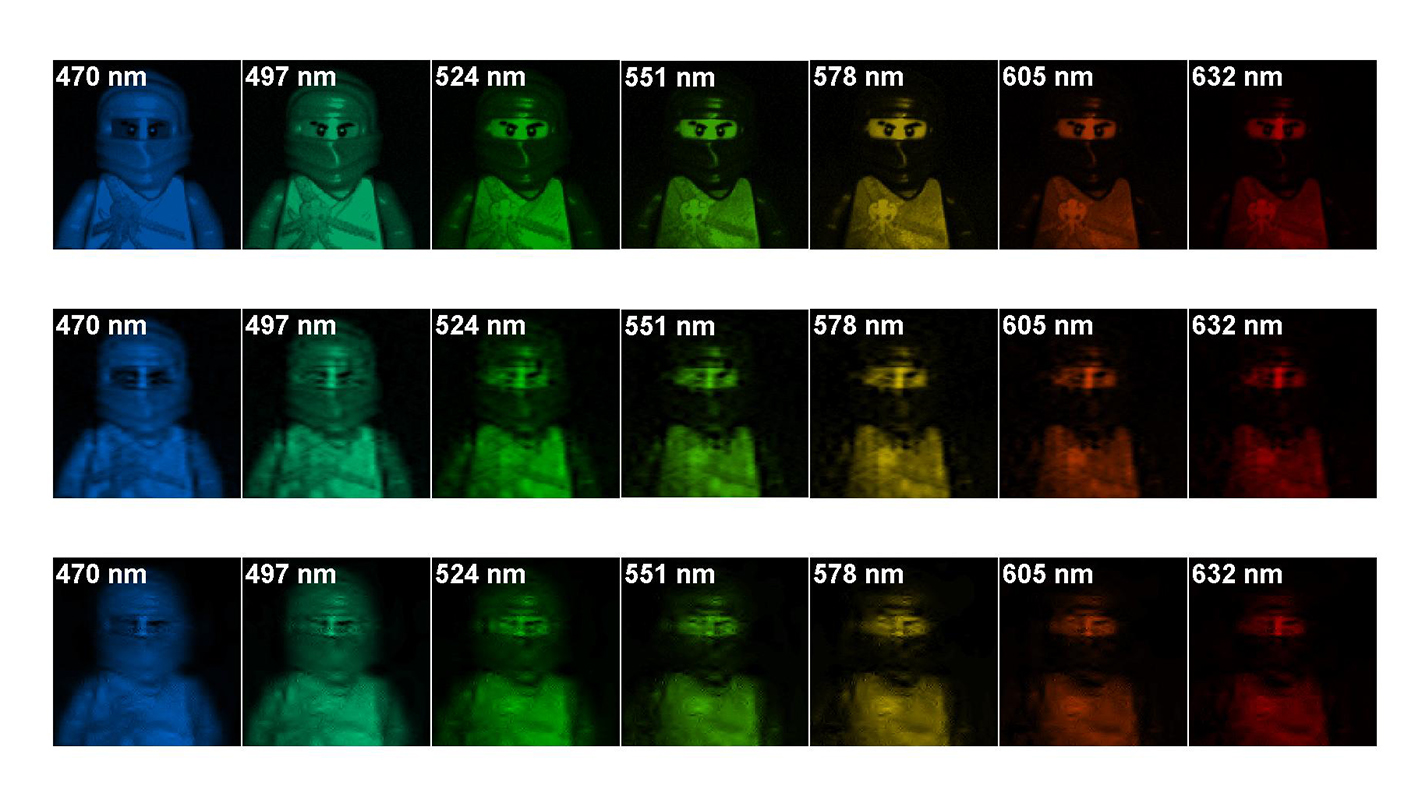

Conventional imaging techniques, such as digital photography, capture images in only three bands of wavelengths (or frequencies) of light: red, green and blue. Hyperspectral imaging creates images across dozens or hundreds of wavelength bands. These images can be used to identify the materials present in an imaged scene, much like spectroscopy done at a distance.

But the technique does face some challenges.

For example, in a conventional imaging system, if an image has millions of pixels across three wavelengths, the image file might be one megabyte. But in hyperspectral imaging, the image file could be at least an order of magnitude larger. This can create problems for storing and transmitting data.
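To put the storage comparison in concrete terms, the sketch below computes the uncompressed size of an image "cube" as pixels × spectral bands × bytes per sample. The 1-megapixel resolution, the 100-band count and the shared 8-bit depth are illustrative assumptions, not figures from the study. Uncompressed sizes are larger than the compressed file size quoted above; the ratio between the two cases is what matters.

```python
# Rough, uncompressed storage estimate for an image "cube":
# height x width x spectral bands x bytes per sample.
# All sizes below are illustrative assumptions, not figures from the study.

def cube_size_bytes(height: int, width: int, bands: int, bytes_per_sample: int) -> int:
    """Uncompressed size in bytes of a height x width x bands data cube."""
    return height * width * bands * bytes_per_sample


def to_mb(n_bytes: int) -> float:
    """Convert bytes to megabytes (10**6 bytes)."""
    return n_bytes / 1e6


# Same 8-bit depth for both, so the ratio reflects the band count only.
rgb = cube_size_bytes(1000, 1000, 3, 1)    # 1 megapixel, 3 bands
hsi = cube_size_bytes(1000, 1000, 100, 1)  # 1 megapixel, 100 bands

print(f"RGB: {to_mb(rgb):.1f} MB")            # RGB: 3.0 MB
print(f"Hyperspectral: {to_mb(hsi):.1f} MB")  # Hyperspectral: 100.0 MB
print(f"Ratio: {hsi / rgb:.0f}x")             # Ratio: 33x
```

Even before accounting for the higher bit depths many spectral sensors use, going from 3 bands to 100 alone makes the raw data more than an order of magnitude larger.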

In addition, capturing hyperspectral images across dozens of wavelengths can be time-consuming, because conventional imaging technology takes a series of images, each capturing a different set of wavelengths, and then combines them.

“It can take minutes,” says Dror Baron, an assistant professor of electrical and computer engineering at NC State and one of the senior authors of a paper describing the new algorithm.

In recent years, researchers have developed new hyperspectral imaging hardware that can acquire the necessary images more quickly and store the images using significantly less memory. The hardware takes advantage of “compressive measurements,” which mix spatial and wavelength data in a format that can be used later to reconstruct the complete hyperspectral image.
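One example of such hardware is the coded aperture snapshot spectral imager (CASSI) named in the study abstract. The sketch below is a minimal model of how it mixes spatial and wavelength data, assuming a single disperser that shifts each spectral band by exactly one detector column and a random binary coded aperture: every band is masked by the same aperture, shifted, and summed onto one 2D detector. The function name `cassi_forward` and all sizes are hypothetical choices for illustration.

```python
import numpy as np


def cassi_forward(cube: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Simplified single-disperser CASSI snapshot (illustrative model).

    cube: (H, W, L) spatio-spectral scene
    mask: (H, W) binary coded aperture
    returns: (H, W + L - 1) detector image
    """
    H, W, L = cube.shape
    y = np.zeros((H, W + L - 1))
    for k in range(L):
        # Code band k with the shared aperture, shift it k columns
        # (assumed one-pixel-per-band dispersion), and accumulate.
        y[:, k:k + W] += mask * cube[:, :, k]
    return y


rng = np.random.default_rng(0)
H, W, L = 64, 64, 24
cube = rng.random((H, W, L))
mask = (rng.random((H, W)) > 0.5).astype(float)
y = cassi_forward(cube, mask)
print(cube.size, y.size, round(cube.size / y.size, 1))  # 98304 5568 17.7
```

In this toy setup a 24-band scene is recorded in a single snapshot using roughly 18 times fewer numbers than the full cube, which is why a reconstruction algorithm is needed to recover the cube afterwards.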

But in order for the new hardware to work effectively, you need an algorithm that can reconstruct the image accurately and quickly. And that’s what researchers at NC State and Delaware have developed.

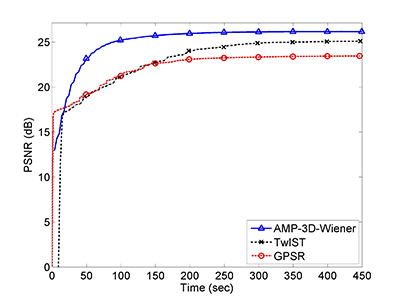

In simulations, the new algorithm significantly outperformed existing algorithms at every wavelength.

“We were able to reconstruct image quality in 100 seconds of computation that other algorithms couldn’t match in 450 seconds,” Baron says. “And we’re confident that we can bring that computational time down even further.”

The higher quality of the image reconstruction means that fewer measurements need to be acquired and processed by the hardware, speeding up the imaging time. And fewer measurements mean less data that needs to be stored and transmitted.

“Our next step is to run the algorithm in a real-world system to gain insights into how the algorithm functions and identify potential room for improvement,” Baron says. “We’re also considering how we could modify both the algorithm and the hardware to better complement each other.”

The paper, “Compressive Hyperspectral Imaging via Approximate Message Passing,” is published online in the IEEE Journal of Selected Topics in Signal Processing. Lead author of the paper is Jin Tan, a former Ph.D. student at NC State. The paper was co-authored by Yanting Ma of NC State and Hoover Rueda and Gonzalo Arce of Delaware. The work was supported by the National Science Foundation under grant number CCF-1217749 and by the U.S. Army Research Office under grants W911NF-14-1-0314 and W911NF-12-1-0380.

-shipman-

Note to Editors: The study abstract follows.

“Compressive Hyperspectral Imaging via Approximate Message Passing”

Authors: Jin Tan, Yanting Ma, and Dror Baron, North Carolina State University; Hoover Rueda and Gonzalo R. Arce, University of Delaware

Published: Feb. 12, IEEE Journal of Selected Topics in Signal Processing

DOI: 10.1109/JSTSP.2015.2500190

Abstract: We consider a compressive hyperspectral imaging reconstruction problem, where three-dimensional spatio-spectral information about a scene is sensed by a coded aperture snapshot spectral imager (CASSI). The CASSI imaging process can be modeled as suppressing three-dimensional coded and shifted voxels and projecting these onto a two-dimensional plane, such that the number of acquired measurements is greatly reduced. On the other hand, because the measurements are highly compressive, the reconstruction process becomes challenging. We previously proposed a compressive imaging reconstruction algorithm that is applied to two-dimensional images based on the approximate message passing (AMP) framework. AMP is an iterative algorithm that can be used in signal and image reconstruction by performing denoising at each iteration. We employed an adaptive Wiener filter as the image denoiser, and called our algorithm “AMP-Wiener.” In this paper, we extend AMP-Wiener to three-dimensional hyperspectral image reconstruction, and call it “AMP-3D-Wiener.” Applying the AMP framework to the CASSI system is challenging, because the matrix that models the CASSI system is highly sparse, and such a matrix is not suitable to AMP and makes it difficult for AMP to converge. Therefore, we modify the adaptive Wiener filter and employ a technique called damping to solve for the divergence issue of AMP. Our simulation results show that AMP-3D-Wiener outperforms existing widely-used algorithms such as gradient projection for sparse reconstruction (GPSR) and two step iterative shrinkage/thresholding (TwIST) given a similar amount of runtime. Moreover, in contrast to GPSR and TwIST, AMP-3D-Wiener need not tune any parameters, which simplifies the reconstruction process.
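The abstract describes AMP as an iterative method that denoises at each iteration, with an adaptive Wiener filter as the denoiser and damping to help convergence. The following is a simplified sketch of that general idea, not the authors' AMP-3D-Wiener. It works on a 1-D signal rather than a 3-D cube, uses a dense i.i.d. Gaussian matrix instead of the sparse CASSI matrix, applies a local-window adaptive Wiener filter directly in the signal domain, and damps both the estimate and the residual. The window width `w=9`, the damping factor `0.7`, the iteration count and the function names are assumptions made for illustration.

```python
import numpy as np


def adaptive_wiener(v, sigma2, w=9):
    """Local-window adaptive Wiener filter for a 1-D signal (w must be odd).

    Returns the filtered signal and the average derivative d(out_i)/d(v_i),
    which AMP uses in its Onsager correction term.
    """
    k = np.ones(w) / w
    vp = np.pad(v, w // 2, mode="reflect")
    mu = np.convolve(vp, k, mode="valid")                # local mean
    s = np.convolve(vp ** 2, k, mode="valid") - mu ** 2  # local variance
    s = np.maximum(s, 1e-12)                             # guard against round-off
    g = np.maximum(s - sigma2, 0.0) / s                  # Wiener gain in [0, 1)
    out = mu + g * (v - mu)
    # Derivative of out_i w.r.t. v_i (reflect-padding boundary effects ignored).
    d = g + (1.0 - g) / w + (s > sigma2) * 2.0 * sigma2 * (v - mu) ** 2 / (w * s ** 2)
    return out, d.mean()


def amp(y, A, denoise, iters=30, damping=0.7):
    """AMP with a plug-in denoiser; damping on both x and z is an assumed variant."""
    m, n = A.shape
    x = np.zeros(n)
    z = y.copy()
    for _ in range(iters):
        sigma2 = z @ z / m                 # estimated effective noise variance
        v = x + A.T @ z                    # pseudo-data: signal plus roughly Gaussian noise
        x_new, div = denoise(v, sigma2)
        z_new = y - A @ x_new + (n / m) * div * z  # residual plus Onsager term
        # Damping: move only part of the way toward the new iterates.
        x = damping * x_new + (1 - damping) * x
        z = damping * z_new + (1 - damping) * z
    return x


rng = np.random.default_rng(1)
n, m = 512, 256
x_true = np.repeat(rng.normal(0, 1, 8), n // 8)   # piecewise-constant test signal
A = rng.normal(0, 1 / np.sqrt(m), (m, n))          # dense i.i.d. Gaussian matrix
y = A @ x_true
x_hat = amp(y, A, adaptive_wiener)
mse = np.mean((x_hat - x_true) ** 2)
print(f"MSE {mse:.4f} vs signal power {np.mean(x_true ** 2):.4f}")
```

Writing each damped update as a convex combination of the old and new iterates leaves AMP's fixed points unchanged; it only takes smaller steps toward them, which is the kind of stabilization the abstract reports needing for the CASSI matrix.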
